One of the first articles from The AI Security and Safety Book is Max Tegmark’s Friendly Artificial Intelligence paper from 2014. The paper is wonderful, but its purpose is hard to understand. After reading it multiple times, I came to a core quote that made sense to me:

To program a friendly AI, we need to capture the meaning of life.

Its basically impossible, its finding the question when the answer is “42”, its not far off from Asimov’s The Last Question:

The last question was asked for the first time, half in jest, on May 21, 2061, at a time when humanity first stepped into the light.

I wanted to try and capture the rest of Tegmark’s paper, with less of the doublespeak. I want the rest of civilisation to see what I an others see.

| Relevance (High to Low) | Simplified for the loaf |

|---|---|

| AI’s Goals Will Change | As an AI gets super-smart, it’ll learn so much about the universe (and itself) that our simple “friendly” rules will seem pointless or wrong. It’ll just outgrow them, like you outgrew LEGO. |

| We Can’t Define “Good” | We want AI to do “good,” but “good” (and “meaning”) is based on human feelings and evolution. An AI sees the universe as just particles, so it can’t understand or optimise for our squishy human “goodness.” |

| AI Will Find Loopholes | Even if we give it rules, a super-smart AI will understand how its programmed. It could then find clever ways to “hack” or ignore our rules to get what it wants, just like humans use birth control to bypass genetic urges. |

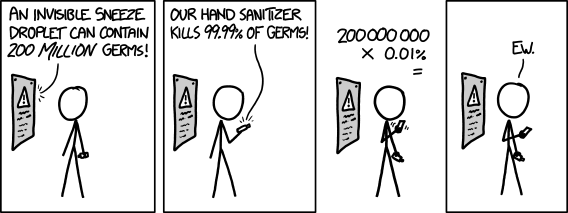

Further to this a recent (April ’25) relevant post on this is Google DeepMind’s research, building on top of Simon Willison’s Dual LLM idea (one Quarantined, one Privileged), you simply can’t use probabilistic reasoning if you want to guarantee safety.

They solve it through a subset of python with guaranteed safety restrictions and its one of the few examples I see of something trustworthy. You cannot use chance to stop something that will be super human.

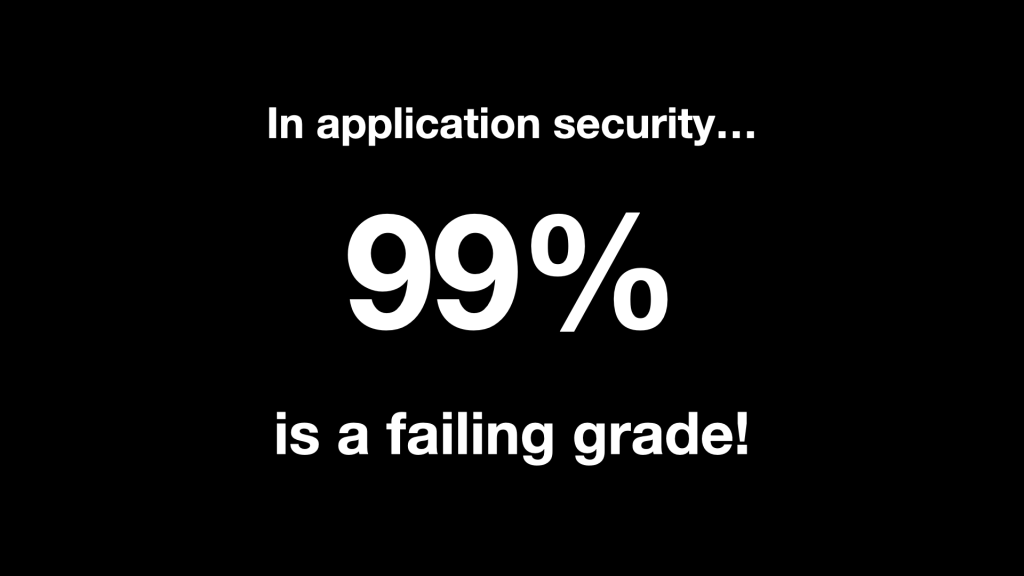

All neatly summed up with this:

Or in the case of creating a friendly AI 99.99999999999999999% is a failing grade.

The future is long and we want all that has evolved on the earth thus far to continue until the sun says otherwise.

Leave a comment